✅ This article is a compilation of research reviews for study purposes. It was not written for commercial purposes. If there are any issues, it will be deleted.

✅ It is written in English; if needed, use the translate button to read it in your language.

1. Introduction

Background & Problem Statement

Recently, the performance of AI models (especially deep learning models) has attracted significant attention. Engineers in diverse fields such as healthcare, agriculture, and the arts have made rapid progress by leveraging stronger AI models, and the medical domain is no exception.

However, a lack of trust still separates clinicians and engineers. Although deep learning models often appear to diagnose more precisely than clinicians, they are used only to a limited extent in clinical practice because they are black-box models. "Explainable and trustworthy AI" is therefore essential in real clinical settings.

Limitations of existing methods

Post-hoc analysis

Post-hoc methods such as Class Activation Mapping (CAM) help analyse a result after the AI model has made its prediction, but the highlighted explanation can be inconsistent with the clinician's judgment. Many researchers point out that this can lead to "inflated trust".

Need for human-like explanation

Most people understand things well through "example-based explanations". Case-Based Reasoning (CBR), which explains a decision by presenting similar examples, therefore has the advantage of being intuitive as an explanatory model.

Goal and Contribution of ContrastDiagnosis

Key idea

We model the clinical reasoning process as an explainable method via CBR built on contrastive learning. We call this framework ContrastDiagnosis.

Combination of Transparency and Post-hoc analysis

Contrastive learning ensures inherent transparency, while simultaneously enhancing explanatory power by incorporating post-hoc explainability through similar region emphasis.

Achieving both performance and interpretability

A key contribution is that, unlike existing black-box models, it achieves high diagnostic accuracy (AUC 0.977) while maintaining high transparency and explainability.

2. Method

The architecture of the proposed ContrastDiagnosis is illustrated in Fig. 1.

Training

Based on the Siamese architecture*, the two subnetworks use the same U-Net-like structure (encoder‑decoder).

A Siamese network is a structure composed of two (or more) neural networks that share the same weights (Shared-Weight Networks). It receives different inputs, extracts each latent representation (embedding), and calculates the similarity (distance) between these embeddings to learn how similar the two inputs are. In other words, it operates by replicating the same network, processing pairs of inputs in parallel, and comparing the resulting vectors. It is mainly used for facial recognition (same person/different person discrimination), text similarity measurement, and retrieving similar lesion cases in medical images.

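To make the weight sharing concrete, here is a minimal NumPy sketch, assuming a toy one-layer encoder (`encode`, a hypothetical stand-in for the U-Net encoder E) and the L2 distance. Both branches call the same function with the same weight matrix, which is all "shared weights" means in practice.

```python
import numpy as np

def encode(x: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Toy encoder (hypothetical stand-in for E): one linear layer followed by tanh."""
    return np.tanh(weights @ x)

def siamese_distance(x1: np.ndarray, x2: np.ndarray, weights: np.ndarray) -> float:
    """Embed both inputs with the SAME weights and return the L2 distance between embeddings."""
    c1 = encode(x1, weights)  # branch 1
    c2 = encode(x2, weights)  # branch 2 reuses the identical parameters
    return float(np.linalg.norm(c1 - c2))

rng = np.random.default_rng(0)
W = rng.normal(size=(8, 16))  # a single weight matrix shared by both branches
a, b = rng.normal(size=16), rng.normal(size=16)
print(siamese_distance(a, b, W))
```

Because the parameters are shared, the distance is symmetric and an input is always at distance zero from itself.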

U-Net architecture S

Rather than performing classification alone, this network also segments the lung nodule, and this segmentation task acts as an 'attention' mechanism that guides the network toward the nodule.

$$S(I;\theta) := D\big(E(A(I);\theta_e);\theta_d\big)$$

The formula above defines the U-Net-like network S. E and D denote the encoder and decoder of the U-Net, with θe and θd representing the weights of the encoder and decoder, respectively (θ collects both). I is the input image and A denotes online data augmentation.

Loss function

First, let us look at the overall structure of the loss function. The loss for training ContrastDiagnosis consists of a "contrastive loss (ℓc)" and a "segmentation loss (ℓs)". ℓc forces the embeddings of benign and malignant nodules apart in the latent space, while ℓs forces the embeddings to focus on the nodule region instead of the background.

The two inputs I(1) and I(2) are first augmented by A(·) and then passed through the encoder E to obtain latent codes (embeddings) c(k) ∈ R^n.

Each branch also predicts a segmentation mask M̃(k), which is compared against the ground-truth mask M(k) using a Dice + Cross-Entropy* loss. The weight ω balances the two tasks: if the segmentation loss is weighted too weakly, the embeddings may ignore lesion cues; if it is weighted too strongly, the contrastive (classification) signal is underweighted.

Dice + Cross-Entropy (DiceCE) loss is a commonly used composite loss function in medical image segmentation. Cross-Entropy (CE) encourages the model to correctly classify each pixel (e.g., lesion vs. background) with probabilistic accuracy, while Dice loss directly optimizes the overlap between the predicted mask and the ground-truth mask, making it effective for small lesions or highly imbalanced classes. However, using CE alone may cause small lesions to be overlooked, and using Dice alone can lead to unstable training. By combining the two, DiceCE achieves both accurate pixel-wise classification and robust overall mask overlap optimization.

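A minimal NumPy sketch of a binary DiceCE loss for one predicted mask, assuming sigmoid outputs and equal weighting of the two terms (`dice_weight` and `ce_weight` are hypothetical knobs, not values from the paper):

```python
import numpy as np

def dice_ce_loss(logits, target, eps=1e-6, dice_weight=1.0, ce_weight=1.0):
    """Binary Dice + Cross-Entropy loss for a single mask.

    logits: raw network outputs (any shape); target: same-shape {0, 1} mask.
    """
    prob = 1.0 / (1.0 + np.exp(-logits))   # sigmoid -> foreground probability per voxel
    p = np.clip(prob, eps, 1.0 - eps)      # avoid log(0) in the CE term
    # pixel-wise binary cross-entropy, averaged over all voxels
    ce = -np.mean(target * np.log(p) + (1 - target) * np.log(1 - p))
    # soft Dice coefficient: overlap between predicted and ground-truth masks
    intersection = np.sum(prob * target)
    dice = (2.0 * intersection + eps) / (np.sum(prob) + np.sum(target) + eps)
    return ce_weight * ce + dice_weight * (1.0 - dice)

target = np.zeros((8, 8, 8))
target[3:5, 3:5, 3:5] = 1.0                   # a small "lesion" in a 3D patch
good_logits = np.where(target == 1, 20.0, -20.0)
print(dice_ce_loss(good_logits, target), dice_ce_loss(-good_logits, target))
```

Even though the lesion occupies only 8 of 512 voxels, the Dice term still drops to near zero only when the small region is matched.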

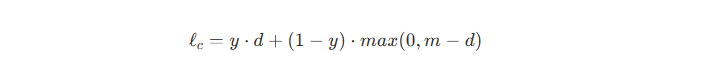

Contrastive loss (ℓc)

Here y = 1 if the two input images belong to the same class (benign/malignant) and 0 otherwise, and m is the margin hyperparameter that enforces a minimum separation for negative pairs. The distance d is obtained by the 'AlignDist' module, which is defined below🔽. If y = 1 (a positive pair), ℓc = d, so the loss decreases as the distance shrinks. If y = 0 (a negative pair), ℓc = max(0, m − d): a penalty applies only if d < m, and pairs already beyond the margin are not pushed further apart.
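Written compactly, the two cases above are ℓc = y·d + (1 − y)·max(0, m − d). The sketch below implements exactly that piecewise rule in NumPy; note that it uses d directly, as described here, rather than the squared distances used in some other formulations of contrastive loss. The default `margin=1.0` is an assumption.

```python
import numpy as np

def contrastive_loss(d, y, margin=1.0):
    """Contrastive loss as described in the text.

    d: distance(s) between the two embeddings; y: 1 for same class, 0 otherwise.
    Positive pairs are penalised by d; negative pairs only while d < margin.
    """
    d = np.asarray(d, dtype=float)
    y = np.asarray(y, dtype=float)
    return y * d + (1.0 - y) * np.maximum(0.0, margin - d)

# a positive pair, a close negative pair, and a negative pair already beyond the margin
print(contrastive_loss([0.3, 0.3, 1.5], [1, 0, 0]))
```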

AlignDist: “distance alignment”

The distance d is produced by the 'AlignDist' module. D(·,·) is the distance metric, and A and b denote the weight and bias of a linear transformation, respectively (here D(·,·) and A are unrelated to the decoder D and the augmentation A used above).

Inference

After training, the model uses case-based reasoning (CBR). In other words, it asks, "Which past cases are similar to this nodule?" and then makes a diagnosis based on those cases.

Step-by-step process

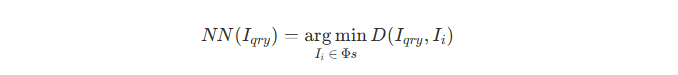

- A new query image I_qry is passed through the network to obtain its latent representation.

- This representation is compared against all instances in the support dataset (Φ_s, consisting of the training and validation samples).

- Using the distance metric, the k-Nearest Neighbors (k-NN) algorithm identifies the k support instances closest to I_qry.

- The class labels of these k neighbors are then aggregated by majority voting to predict the label (benign vs. malignant) for I_qry.

- The hyperparameter k is optimized on the validation set.
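The inference steps above can be sketched as follows, assuming embeddings are already computed and stored as NumPy arrays and that the L2 distance is used (as in the experiments section). `knn_predict` and `choose_k` are hypothetical helper names; for tuning k, the sketch assumes the support set passed in excludes the validation samples, so a validation query cannot retrieve itself.

```python
import numpy as np
from collections import Counter

def knn_predict(query_emb, support_embs, support_labels, k):
    """Return the majority label among the k nearest support cases, plus their indices."""
    dists = np.linalg.norm(support_embs - query_emb, axis=1)  # L2 distance to every support case
    nearest = np.argsort(dists, kind="stable")[:k]             # indices of the k closest cases
    votes = Counter(support_labels[i] for i in nearest)        # majority vote (odd k avoids binary ties)
    label = votes.most_common(1)[0][0]
    return label, nearest  # the neighbours double as the "reference cases" shown to clinicians

def choose_k(val_embs, val_labels, support_embs, support_labels, candidates=(1, 3, 5, 7)):
    """Pick the k with the highest validation accuracy (ties -> the smaller k)."""
    best_k, best_acc = None, -1.0
    for k in candidates:
        preds = [knn_predict(q, support_embs, support_labels, k)[0] for q in val_embs]
        acc = np.mean(np.asarray(preds) == np.asarray(val_labels))
        if acc > best_acc:
            best_k, best_acc = k, acc
    return best_k, best_acc

support = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
labels = np.array([0, 0, 0, 1, 1, 1])  # 0 = benign, 1 = malignant
label, idx = knn_predict(np.array([0.05, 0.05]), support, labels, k=3)
```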

Advantage

Instead of only outputting a binary prediction, the model provides reference cases (nearest neighbors) as evidence for its decision. This offers explainability, making the decision process more interpretable and aligned with how radiologists reason using past similar cases.

Explainability

1. Transparency through Case-Based Reasoning (CBR)

Since ContrastDiagnosis is built upon Case-Based Reasoning (CBR), the model inherently provides strong transparency. When classifying a new nodule, the system presents similar past instances (retrieved via k-NN), enabling clinicians to intuitively understand the rationale behind the model's decision.

2. Siamese Local-CAM

Beyond CBR, the framework incorporates post-hoc interpretability through Siamese Local-CAM.

- While conventional Grad-CAM highlights broad regions, Local-CAM is specifically designed to capture fine-grained regions, which is crucial for small lesions such as lung nodules.

- By integrating Local-CAM into the Siamese architecture, the system highlights the regions that directly influence the similarity/distance judgement between a query and support image.

Gradients are back-propagated from the distance d. The attention map is computed as below.

The channel weight w_k is defined as below.

This process computes a weight w_k for each channel activation A_k, reflecting how much that channel contributes to the final distance d. These weights are used to calculate L_t, the attention map of target layer t, and w_(t−1) denotes the weight tensor of the upper-level target layer (t − 1). A_k(x,y,z) denotes the activation map of the k-th channel at the 3D spatial position (x,y,z). d denotes the distance output by the Siamese network, while A_k(i,j,d) denotes the voxel of A_k located at position (i,j,d); in this index, d is a spatial coordinate, not the distance.
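The exact Local-CAM equations are not reproduced here, so the sketch below shows only the standard Grad-CAM step that it builds on, applied to the Siamese distance: each channel weight w_k is the mean gradient of d over all voxels of A_k, and the map is the ReLU of the weighted channel sum. It assumes the gradients have already been obtained from an autodiff framework, it omits the upper-layer term w_(t−1), and the sign convention (highlighting regions that increase d) is an assumption.

```python
import numpy as np

def distance_cam(activations, grads):
    """Grad-CAM-style map for a Siamese distance (a simplification, not the exact Local-CAM).

    activations: (K, X, Y, Z) feature maps A_k of the target layer.
    grads:       (K, X, Y, Z) gradients of the distance d w.r.t. those activations,
                 assumed to come from an autodiff framework.
    """
    # w_k: average gradient over every voxel position of channel k
    weights = grads.reshape(grads.shape[0], -1).mean(axis=1)
    # weighted sum over channels -> (X, Y, Z), then ReLU keeps positive contributions
    cam = np.maximum(0.0, np.tensordot(weights, activations, axes=1))
    if cam.max() > 0:
        cam = cam / cam.max()  # normalise to [0, 1] for display
    return weights, cam

acts = np.zeros((2, 2, 2, 2))
acts[0, 0, 0, 0] = 1.0                     # channel 0 fires at voxel (0, 0, 0)
acts[1, 1, 1, 1] = 1.0                     # channel 1 fires at voxel (1, 1, 1)
grads = np.stack([np.ones((2, 2, 2)), -np.ones((2, 2, 2))])
w, cam = distance_cam(acts, grads)
```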

Confidence Score

To complement the visualization, the framework introduces a confidence score. It computes the distribution of distances among correctly matched pairs in the support set and uses a 95% confidence interval of that distribution as a threshold.
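The description does not state exactly how the 95% interval is formed. A minimal sketch, assuming a one-sided bound taken as the 95th percentile of the distances between correctly matched support pairs (`confidence_threshold` and `is_confident` are hypothetical names):

```python
import numpy as np

def confidence_threshold(correct_pair_distances, level=0.95):
    """Distance below which a fraction `level` of correctly matched support pairs fall."""
    return float(np.quantile(np.asarray(correct_pair_distances, dtype=float), level))

def is_confident(query_distance, threshold):
    """Flag a prediction as confident if its matching distance lies within the threshold."""
    return query_distance <= threshold

threshold = confidence_threshold(np.arange(1, 101))  # toy distances 1..100
```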

3. Experiments and Results

In this study, ContrastDiagnosis was evaluated on the public LIDC/IDRI dataset, which consists of lung CT scans collected from seven academic institutions. A total of 1,226 lung nodules were included, with 980 used for training and 246 reserved for independent testing. Nodules were labeled malignant if their radiologist-assigned malignancy score was higher than 3, and benign otherwise. For preprocessing, 3D CT volumes were cropped into local patches of size [64,64,32] around each nodule. To improve generalization, extensive data augmentation was applied with 80% probability, including 90-degree rotations, horizontal and vertical flipping, contrast adjustment, affine transformations, and Gaussian noise.
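The labeling rule, patch cropping, and part of the augmentation pipeline can be sketched in NumPy as below. The threshold (> 3), the [64,64,32] patch size, and the 80% probability come from the text; applying the pipeline as a whole with probability 0.8 and each transform with probability 0.5, the contrast and noise ranges, and zero padding at volume borders are assumptions, and affine transformations are omitted for brevity.

```python
import numpy as np

PATCH_SIZE = (64, 64, 32)  # patch size stated in the text

def label_from_score(malignancy_score):
    """Malignant (1) if the radiologist score is higher than 3, benign (0) otherwise."""
    return int(malignancy_score > 3)

def crop_patch(volume, center, size=PATCH_SIZE):
    """Crop a patch centred on the nodule, zero-padding where it leaves the volume."""
    padded = np.pad(volume, [(s // 2, s // 2) for s in size], mode="constant")
    # after padding by s//2, original index c sits at c + s//2, so the patch starts at c
    slices = tuple(slice(c, c + s) for c, s in zip(center, size))
    return padded[slices]

def augment(patch, rng, p=0.8):
    """With probability p, apply a random subset of simple augmentations (sketch)."""
    if rng.random() >= p:
        return patch
    out = patch
    if rng.random() < 0.5:
        # 90-degree in-plane rotation; shape is kept because the first two sides are equal
        out = np.rot90(out, k=rng.integers(1, 4), axes=(0, 1))
    if rng.random() < 0.5:
        out = np.flip(out, axis=0)  # vertical flip
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)  # horizontal flip
    if rng.random() < 0.5:
        gamma = rng.uniform(0.8, 1.2)  # hypothetical contrast (gamma) range
        lo, hi = out.min(), out.max()
        if hi > lo:
            out = lo + (hi - lo) * ((out - lo) / (hi - lo)) ** gamma
    if rng.random() < 0.5:
        out = out + rng.normal(0.0, 0.01, size=out.shape)  # hypothetical Gaussian noise std
    return np.ascontiguousarray(out)

vol = np.zeros((100, 100, 50))
vol[50, 50, 25] = 1.0  # toy "nodule" voxel
patch = crop_patch(vol, (50, 50, 25))
aug = augment(patch, np.random.default_rng(0))
```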

The model was trained using the L2 (Euclidean) distance metric, with the loss weight ω set to 1.5. Optimization used the Adam optimizer with an initial learning rate of 0.001, and Stochastic Gradient Descent with Warm Restarts (SGDR) for learning-rate scheduling. For comparison, the diagnostic accuracy of ContrastDiagnosis was benchmarked against several traditional supervised lung nodule classification methods, and it showed competitive performance across metrics.
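SGDR restarts a cosine-annealed learning rate at the start of each cycle. A minimal pure-Python sketch, using the stated initial learning rate of 0.001 as the peak; the cycle length `t0`, growth factor `t_mult`, and floor `lr_min` are hypothetical, since the text does not give them:

```python
import math

def sgdr_lr(epoch, lr_max=1e-3, lr_min=0.0, t0=10, t_mult=2):
    """Cosine-annealed learning rate with warm restarts (SGDR) at a given epoch.

    Requires t0 > 0 and t_mult >= 1.
    """
    t_i, t_cur = t0, epoch
    while t_cur >= t_i:  # walk forward through restart cycles to find the current one
        t_cur -= t_i
        t_i *= t_mult
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * t_cur / t_i))

schedule = [sgdr_lr(e) for e in range(40)]
```

In PyTorch, the same schedule is available as `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult, eta_min)` wrapped around an Adam optimizer.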

Qualitative analyses were also conducted. Figure 2 illustrated prediction results, where each query nodule was paired with its top six most similar support cases, along with a similarity indicator that reflected the confidence of each prediction. Both correct and incorrect classifications were displayed. Figure 3 further visualized the contrastive regions identified by Siamese Local-CAM for selected query-support pairs. In each case, the most representative slice of the 3D patch was shown, with yellow outlines highlighting the fine-grained regions that contributed most to the similarity judgment. Additional visual examples were provided in the supplementary material.

4. Discussion and Conclusion

Diagnostic Performance

ContrastDiagnosis achieved top-tier diagnostic accuracy compared with other supervised classification approaches. In particular, its F1 score highlights balanced performance across both benign and malignant classes.

Limitations of Case-Based Reasoning (CBR)

A key limitation of the CBR approach lies in the restricted diversity of the support set. As illustrated in Case 2 (Fig. 2), the model can classify a query correctly while still retrieving cases that are not visually similar to it. The authors attribute the high overall accuracy to the use of contrastive learning, which produces a smoother and more consistent latent space for representation.

Enhancing Interpretability

Similar-case retrieval enables clinicians to intuitively understand the model's decision-making process. In addition, similarity indicators derived from the distribution of distances within the support set provide a confidence measure for each prediction, and Siamese Local-CAM visualizes the discriminative regions that contribute to the similarity judgment between query and support cases. This allows clinicians not only to interpret but also to verify or challenge the model's conclusions, as demonstrated in Case 5.

Conclusion

The authors propose ContrastDiagnosis as a straightforward yet powerful framework for interpretable diagnostics. By leveraging contrastive learning, the model builds a transparent case-based reasoning system. Furthermore, interpretability is strengthened through post-hoc explanations such as activation-map visualizations and confidence scoring, which together allow clinicians to gain deeper and more intuitive insights into the AI model’s decision-making process.
